Moving This Site to AWS

2020-12-27

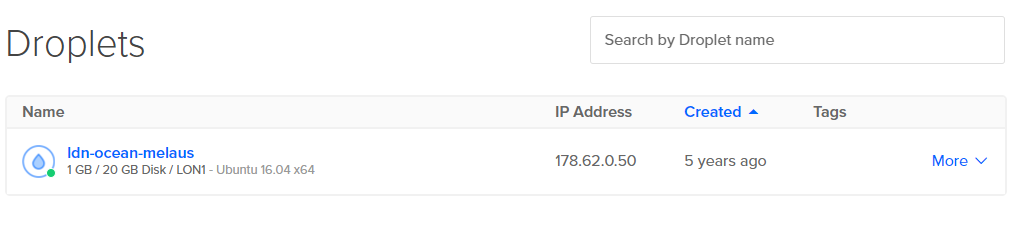

As you might recall, I hosted this website on a DigitalOcean instance. Whilst it has served this static site well, I have barely touched the setup for years (5 years to be exact!).

Whilst I admire its stability, an instance that's been running for 5 years (bar various restarts)... It's rogue!

Not only that, all the setup was done directly on the server. Ask me how I set up certbot and Let's Encrypt certificates, or the nginx settings, or how I got a Python service (github-top5) running on the box, and I haven't a single clue...!

Now that I have a few years of software engineering knowledge under my belt, it's scary to think I did such rogue things. Time to change this!

And this is a great opportunity. Celebrations aside (Covid or not), I had other plans over this festive season, but things have changed (🎉). So, I've got some time to work on personal side projects like this!

🕸️ Website

There are so many things I need to clean up about this website - the redundant code, the unnecessary plugins, the hacked-together stuff... Working as a full-time software engineer really helps to piece things together.

Pelican, the static site generator I use, seems to have evolved slightly as well, though there are many newer engines backed by Go or JavaScript these days. So if I had to make changes to the website itself, I might look at one of these new build engines - Hugo in particular.

But all of this is for another time. I want to focus on getting the infrastructure right before making these changes.

Rather than using an instance on DigitalOcean, the idea is to run the site on AWS with services such as S3, CloudFront, and Amazon-issued certificates for TLS connections.

🏗️ Infrastructure

🔨 AWS

The world of cloud computing has moved on since 2015, and AWS offers a lot more to serve this simple site. For example, I don't need a fully fledged virtual machine to serve several tens of megabytes of static website. I also don't need the trouble of setting up nginx or signing SSL certs for HTTPS access using Let's Encrypt.

In particular, I'm going to use S3 (to store the static site), CloudFront (to serve it via melaus.xyz) and ACM (to manage the Amazon-signed SSL certificate).

🔨 GitHub Actions

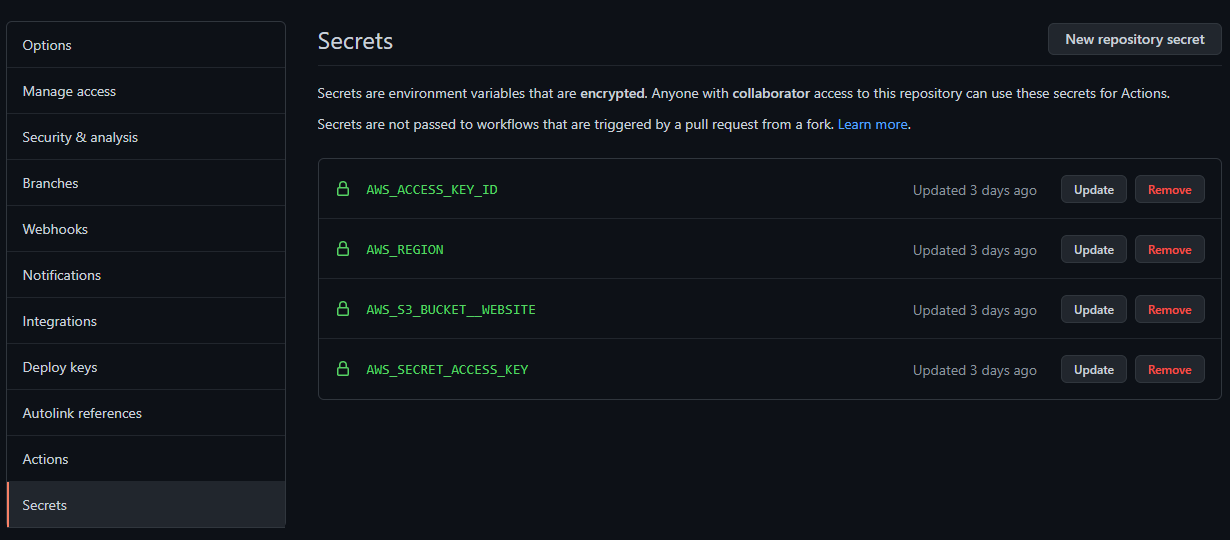

You can set up encrypted secrets in a GitHub repo easily, and reference them as environment variables later in the build steps

To keep things simple, I'm going to build the project using GitHub Actions. I already pay for GitHub Pro, which includes some free GitHub Actions allowance per month. There's really no point in setting up yet another tool for building a relatively small side project.

This also means that I have a consistent build environment and steps with all the required packages and libraries. After all, the idea of this whole migration is consistency and replicability.

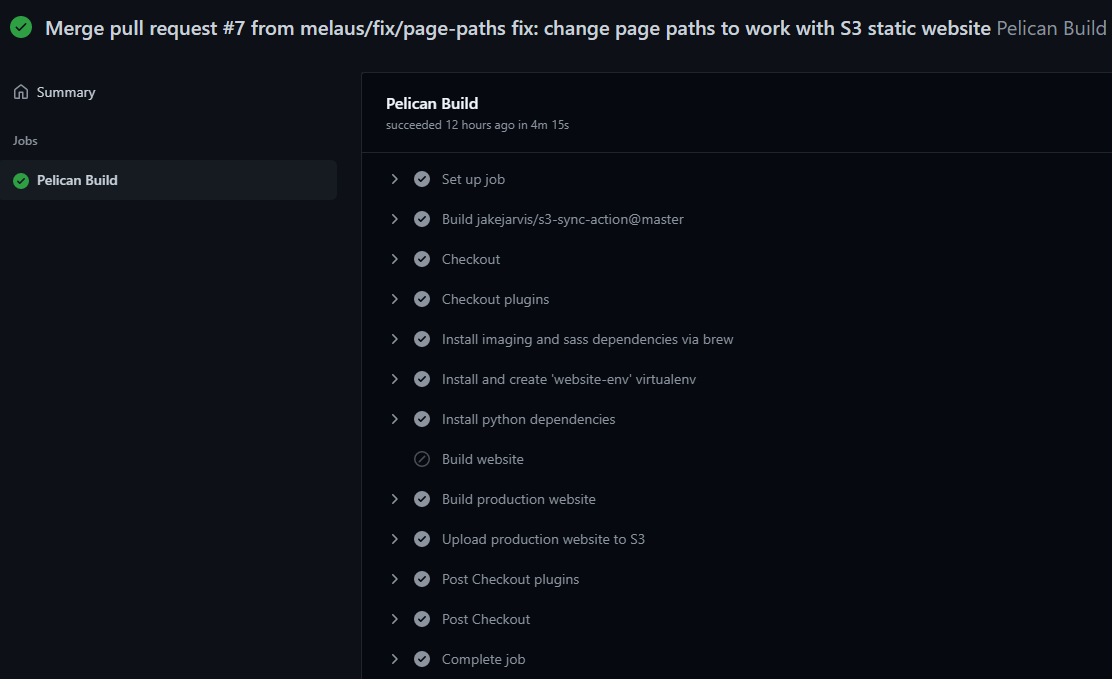

Builds are clearly visualised and can be debugged easily

🔨 Terraform

I've learnt my lesson, so no more manual setup! Terraform is a great tool to ensure that your setup works and is replicable.

If work has taught me anything, it's that Terraform can be painful (Terribleform, as I sometimes refer to it...😂), but I think it's worth doing. If anything, I need some devops practice!

Setup

There's some basic setup required so that Terraform knows what platform it's dealing with. So in a main.tf file, I declare an aws provider (see Terraform AWS Provider).

I also declare an s3 backend (see Terraform s3 backend) to keep track of the state of Terraform.

Note that I created the s3 bucket to store the state manually. Perhaps there's a way to orchestrate this via Terraform as well, but using Terraform to set up Terraform seems a bit unintuitive to me...

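    // main.tf
    provider "aws" {
      // set environment variables: AWS_ACCESS_KEY_ID, AWS_SECRET_ACCESS_KEY, AWS_DEFAULT_REGION
    }

    terraform {
      backend "s3" {
        // backend blocks cannot reference variables (var.*), so the bucket and
        // key are supplied at init time instead, e.g.
        //   terraform init -backend-config="bucket=<state bucket>" -backend-config="key=<tfstate filename>"
        encrypt = true
      }
    }

Here are the Terraform resources I used for setting up all things AWS: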

- S3 buckets for website
  - aws_s3_bucket resource
  - aws_iam_policy_document data
  - aws_s3_bucket_policy resource
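The bucket policy in this section references data.aws_iam_policy_document.website_s3_iam_policy without showing it. A minimal sketch of what such a policy document might look like follows, assuming the intent is plain public read access to every object in the website bucket (the post mentions public-read objects later on). The statement id and the exact set of actions are assumptions, not the original configuration.

```hcl
// Sketch only: the sid and the single s3:GetObject action are assumptions.
// The names website_s3_iam_policy and website-s3 are the ones used in this post.
data "aws_iam_policy_document" "website_s3_iam_policy" {
  statement {
    sid     = "PublicReadGetObject"
    effect  = "Allow"
    actions = ["s3:GetObject"]

    // apply to every object in the bucket, not to the bucket itself
    resources = ["${aws_s3_bucket.website-s3.arn}/*"]

    // "*" as type and identifier means any (anonymous) principal
    principals {
      type        = "*"
      identifiers = ["*"]
    }
  }
}
```

The data source renders to JSON through its json attribute, which is what the aws_s3_bucket_policy resource consumes.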

    // by using 'aws_iam_policy_document',
    // you can reference the policy as opposed to having to paste a json blob
    resource "aws_s3_bucket_policy" "website_s3_bucket_policy" {
      bucket = aws_s3_bucket.website-s3.bucket
      policy = data.aws_iam_policy_document.website_s3_iam_policy.json
    }

- CloudFront setup
  - aws_cloudfront_distribution resource
  - aws_acm_certificate resource
  - aws_acm_certificate_validation resource
  - aws_route53_record resource (for cert validation)

    resource "aws_route53_record" "cdn_cert_validation" {
      name    = element(aws_acm_certificate.cdn.domain_validation_options.*.resource_record_name, 0)
      type    = element(aws_acm_certificate.cdn.domain_validation_options.*.resource_record_type, 0)
      zone_id = var.zone_id
      records = [element(aws_acm_certificate.cdn.domain_validation_options.*.resource_record_value, 0)]
      ttl     = 60
    }

- Route53 and DNS record setup
  - aws_route53_zone resource
  - aws_route53_record resource
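For the DNS records themselves, one common approach is a Route53 alias record pointing at the CloudFront distribution. The sketch below assumes that approach; the post does not say which record type was actually used. The resource names apex and www are hypothetical, while var.zone_id, var.domain_name and aws_cloudfront_distribution.cdn are the names used elsewhere in this post.

```hcl
// Sketch only: "apex" and "www" are hypothetical resource names.
resource "aws_route53_record" "apex" {
  zone_id = var.zone_id
  name    = var.domain_name
  type    = "A"

  // an alias record targets the distribution rather than a fixed IP address
  alias {
    name                   = aws_cloudfront_distribution.cdn.domain_name
    zone_id                = aws_cloudfront_distribution.cdn.hosted_zone_id
    evaluate_target_health = false
  }
}

resource "aws_route53_record" "www" {
  zone_id = var.zone_id
  name    = "www.${var.domain_name}"
  type    = "A"

  alias {
    name                   = aws_cloudfront_distribution.cdn.domain_name
    zone_id                = aws_cloudfront_distribution.cdn.hosted_zone_id
    evaluate_target_health = false
  }
}
```

Alias records carry no ttl of their own, which is why none is set here.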

A note on the ACM cert: use the certificate_arn from aws_acm_certificate_validation for the aws_cloudfront_distribution cert setup. This ensures that Terraform waits until the cert is verified before continuing.
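A minimal sketch of that wiring is below. It is a variant of the validation record shown earlier, written under the assumption of AWS provider version 3 or later, where domain_validation_options is a set and is usually iterated with for_each rather than indexed with element. The ssl_support_method value is also an assumption; the rest of the distribution is elided as in the other snippets.

```hcl
// Sketch only: assumes AWS provider v3+, where domain_validation_options is a set.
resource "aws_route53_record" "cdn_cert_validation" {
  for_each = {
    for dvo in aws_acm_certificate.cdn.domain_validation_options : dvo.domain_name => {
      name   = dvo.resource_record_name
      type   = dvo.resource_record_type
      record = dvo.resource_record_value
    }
  }

  // the apex and wildcard names can share the same validation record
  allow_overwrite = true
  zone_id         = var.zone_id
  name            = each.value.name
  type            = each.value.type
  records         = [each.value.record]
  ttl             = 60
}

resource "aws_acm_certificate_validation" "cdn_cert" {
  provider                = aws.virginia
  certificate_arn         = aws_acm_certificate.cdn.arn
  validation_record_fqdns = [for record in aws_route53_record.cdn_cert_validation : record.fqdn]
}

resource "aws_cloudfront_distribution" "cdn" {
  // ...

  viewer_certificate {
    // referencing the validation resource (not the certificate itself)
    // makes Terraform wait until the cert has been issued
    acm_certificate_arn = aws_acm_certificate_validation.cdn_cert.certificate_arn
    ssl_support_method  = "sni-only" // assumption: SNI rather than a dedicated IP
  }

  // ...
}
```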

Caveats

It all seems rather straightforward. However, several things caught me out:

🤔 DNS transfer from DigitalOcean to AWS

- This took quite a while. I thought my Terraform setup was wrong, but no, it did take nearly half an hour before the transfer was complete.

🤔 ACM certificate

- Certificates used with CloudFront must be issued in the us-east-1 region. This took me a while to figure out. It can be done by declaring and using a Virginia aws provider.

    // set a new provider with region 'us-east-1' (Virginia)
    provider "aws" {
      alias  = "virginia"
      region = "us-east-1"
    }

    // set your `aws_acm_certificate_validation` and `aws_acm_certificate`
    // to use the Virginia provider
    resource "aws_acm_certificate" "cdn" {
      provider = aws.virginia
      // ...
    }

    resource "aws_acm_certificate_validation" "cdn_cert" {
      provider = aws.virginia
      // ...
    }

🤔 Hitting melaus.xyz gave me access denied despite objects in the S3 bucket being set to public-read

- After some digging, it turns out you can set default_root_object to index.html on the aws_cloudfront_distribution resource.

    resource "aws_cloudfront_distribution" "cdn" {
      // ...
      default_root_object = "index.html"
      // ...
    }

🤔 I added melaus.xyz and www.melaus.xyz DNS records, but hitting www.melaus.xyz gave me ERR_SSL_PROTOCOL_ERROR

- This took me the longest! Number 1, I signed my certificate with melaus.xyz as the domain name, then realised that I also need to cover www.melaus.xyz and other *.melaus.xyz subdomains.
- Number 2, CloudFront also needs to be told which domains it should accept for reaching the S3 bucket.

    // Number 1 - add 'subject_alternative_names' so that the cert is signed for any subdomains
    resource "aws_acm_certificate" "cdn" {
      provider                  = aws.virginia
      domain_name               = var.domain_name
      validation_method         = "DNS"
      subject_alternative_names = ["*.${var.domain_name}"]
    }

    // Number 2 - set aliases on CloudFront so that it accepts `www.melaus.xyz`
    resource "aws_cloudfront_distribution" "cdn" {
      // ...
      aliases = [var.domain_name, "www.${var.domain_name}"]
      // ...
    }

Voilà!

☑️ It Works!

The site is now fully on AWS and all working!

Next steps:

- Remove unnecessary code

- Explore and compare new static site generators with Pelican

- Simplify build steps even further with GitHub Actions

- Write more articles on this site!